Conquering complexity in the Go world: Monoliths, Microservices and Tools

Complexity is the ultimate enemy and there exists no silver bullet. No neat solution in small details can safe us. Understanding the big picture and getting all the necessary context is usually the hard part. Of course it helps that simplicity is the north star in Go. And proverbs like ‘do less - enable more’ can be really useful. Now lets see how this all scales up.

Monoliths

In the old days we had only monoliths with one deployable unit per system. Ops insisted on this and it has never been questioned by anyone. Unfortunately these systems grew without bounds and usually contained a lot of spaghetti code that converts any project slowly but surly into a big ball of mud.

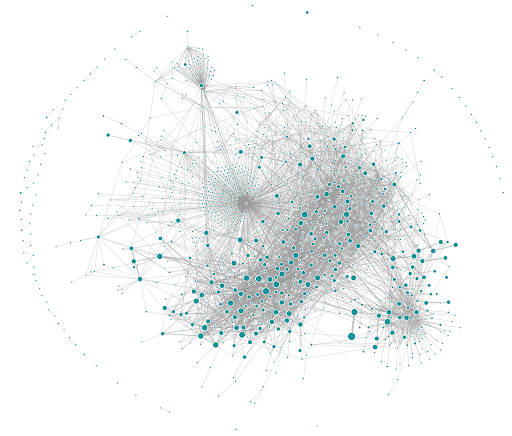

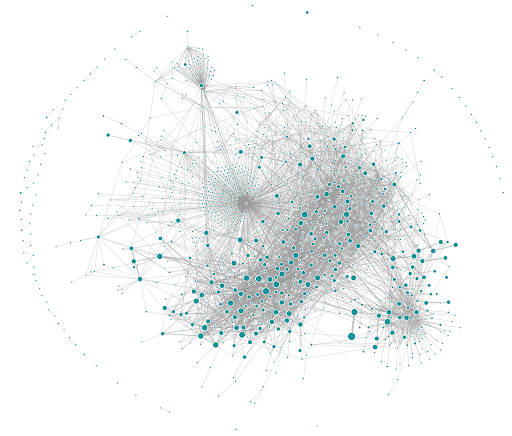

A typical class diagram for such a monolith looks something like this:

We can see some nice parts at the outer edges. But there is a crazy mess in the middle. Well, it is just too simple to reuse something quickly anywhere in the system. And the DRY principle (Don’t Repeat Yourself) aids to that.

The simplicity we strive for with Go and its proverbs can be of help but they don’t prevent such a mess by themself.

Programming Languages And Monoliths

Most of those monoliths have been written in Java or C#. For working with such a monoliths developers are using powerful IDEs like IntelliJ or Visual Studio respectively. Back in my own Java days I have experienced the power of these tools and can confirm that working effectively on such a monolith wouldn’t be possible without such IDEs. Thankfully we can have similar support for Go.

With these IDEs you can perform at least the following relevant tasks:

- Structural analysis

- Renaming classes, methods, variables…

- Moving classes, methods

- Extract methods, split up classes

Of course big companies build their own specialized tools. Especially Google is famous for that. And so it is very hard to replicate their success stories without the same amount of resources.

But the programming language itself has more influence on the work with big code bases. Java and C# both use especially classes but also packages for encapsulation. Circular dependencies between classes are allowed in both languages. And so are circular dependencies between packages. Go on the other hand doesn’t support something like classes for encapsulation at all. But packages are supported very much and circular dependencies between packages are prevented by the compiler. This allows the developer to always find a straight path from the main package to any other package in the system. So I think that Go scales better for big code bases than Java and C#.

In recent years micro services have come to break up monoliths for ever. Let’s see how well that scales.

Microservices

Now we have micro services everywhere. That can result in 1 big system with 1,000 deployable units. This just seems to be the new mantra and DevOps and cloud advocates seem to insist on it. So big systems still grow without bounds and often have a spaghetti architecture.

The reasons for spliting services are many. One part mustn’t take down another one is a really good reason. If parts have to scale differently that is another good reason If parts are really independent they should be kept apart, too. But often a service is split to prevent spaghetti code or even just because it is so cool or everybody is doing it.

Many very small services can also result from developers wanting to use the latest cool stuff because small services are much better for technical experiments. Another known driver for many services and overall complexity is the CQRS architectural pattern.

A good example of such an architecture can be seen in the following service diagram from Uber:

Here I have to apologize because I sold you this image as a class diagram before. But the truth is that there are no public class diagrams of monoliths available because they are just too messy and useless.

Micro services are completely independent of each other and usually just held together by some kubernetes configuration files. These are very hard to analyze and refactor since no shiny IDE with proper tooling exists for this.

So finding providers and especially users of data is hard in a micro service architecture. Updating a dependency for 1,000 microservices is even harder. So this is rarely done and often the versions drift apart. This makes it very difficult to unite services later.

Moving functionality to other services is tedious as there are no tools available and you have to rely on copy, paste and tests.

Cutting services in a different way is almost impossible. Unfortunately I have never seen a company who has done this right the first time around. No matter how clever or high profile the engineers.

So in the end we have a similar complexity as in a monolith but without any tooling for handling it.

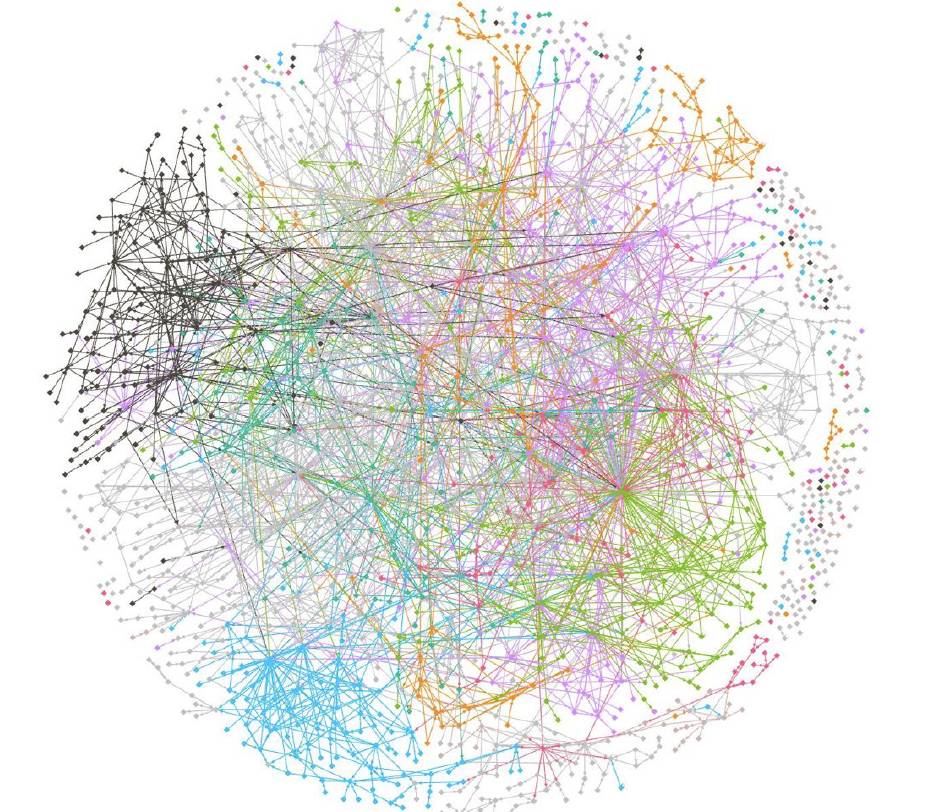

Another classic example of a micro service diagram it the one from Monzo:

Even though the relationships between services are coloured this even more difficult to understand and work with.

Intermediate Results

It seems that we just have to live with some sort of spaghetti and can just choose it’s form.

Within a single code base it is much easier to move functionality around and analyze the structure than between multiple repositories. Since Go is a typed programming language with great support for tooling this can often be automated.

So this leaves the following very good reasons for splitting up services:

- Some part of a service has to scale much differently than another part. E.g.: A part open to the public versus a part used only by internal users.

- A failure in some parts of the service should not cause a failure of other parts. E.g.: A rather simple part open to the public shouldn’t be effected by a failure in a complex part used only by internal users.

If those parts still share a lot of domain logic and context it often makes sense to implement them in the same repository as a single Go module. So we have multiple executables but still a single code base.

If parts don’t share much it is of course an option to seperate them into multiple Go modules or even repositories. Especially if the parts are rather large.

Unfortunately monorepos aren’t a silver bullet either because there are so many people working on it at the same time. This makes merging a pull request (PR) quite difficult. If the structure within the repository isn’t really great there is (almost) always somebody else merging their PR before we manage to get ours in. And we end up handling another set of merge conflicts.

In the end there is no way around a sound code structure and it seem to be much better to handle the complexity with tools than without.

Conquering Complexity In A Single Code Base With Tools

We have seen the power of IDEs in Programming Languages And Monoliths. Here we look only at Go code bases and special tools.

Fortunately we have some advanteges in the Go world. Go has been engineered with scalability in mind. And packages are made for encapsulating functionality. Finally dependency cycles between packages aren’t possible.

So we only have to take care of unwanted dependencies between packages without having to check for cycles. This can be done with a simple tool that can be used on the command line or in CI/CD pipelines. A tool that is fulfilling this purpose is the spaghetti-cutter.

The command line options are quite simple:

$ spaghetti-cutter --help

Usage of spaghetti-cutter:

-e don't report errors and don't exit with an error (shorthand)

-noerror

don't report errors and don't exit with an error

-r string

root directory of the project (shorthand) (default ".")

-root string

root directory of the project (default ".")

Additionally we need a simple .spaghetti-cutter.hjson configuration file at

the root of the code base you want to handle.

An empty file is good enough for a start.

Running it now at the root of a simple code base gives:

$ spaghetti-cutter -e

2022/12/16 22:16:13 INFO - configuration 'allowOnlyIn': .....

2022/12/16 22:16:13 INFO - configuration 'allowAdditionally': .....

2022/12/16 22:16:13 INFO - configuration 'god': `main`

2022/12/16 22:16:13 INFO - configuration 'tool': ...

2022/12/16 22:16:13 INFO - configuration 'db': ...

2022/12/16 22:16:13 INFO - configuration 'size': 2048

2022/12/16 22:16:13 INFO - configuration 'noGod': false

2022/12/16 22:16:13 INFO - no errors are reported: true

2022/12/16 22:16:13 INFO - root package: money

2022/12/16 22:16:13 INFO - Size of package '/': 365

2022/12/16 22:16:13 INFO - No errors found.

The default configuration values are reported and the size of all packages is reported.

So the -noerror option makes it easy to tweak the size configuration value.

A typical configuration file looks like this:

{

"tool": ["x/*"],

"db": ["store/*"],

"size": 1024,

"allowAdditionally": {

// this package is allowed in API tests so we can test with real data

"*_test": ["readdata"]

},

// document and restrict usage of external packages

"allowOnlyIn": {

"github.com/stretchr/testify**": ["*_test"]

}

}

Since the configuration file is in HJSON format, comments are allowed and encouraged.

tool packages aren’t allowed to import other packages in the project but can

be used everywhere except other tool packages.

db packages are allowed to import tool packages and other db packages but

nothing else.

They can be used in all packages except tool packages.

god packages can use everything. The default is main packages.

This should be good for almost all code bases but can be overridden.

All other packages are standard packages and can use all tool and db packages. But they can’t use each other by default or we would have a lot of spaghetti code again.

All of this can be overridden with allowAdditionally entries to allow more

dependencies and allowOnlyIn entries to enforce further restrictions.

For really big code bases variables can be used to keep the configuration concise and descriptive. It is the intention of the configuration file to serve as documentation for new team members.

With such a tool there is no upside left when splitting a code base just to prevent spaghetti code.

Conclusion

Even though there is no silver bullet different approaches lead to very different results in the long run. Unfortunately these results can’t be seen early on but rather when the “point of no return” is far behind. This makes these decisions so difficult and important. Unfortunately there are still a lot of articles out there that praise approaches that have been proven as problematic by now.

Fortunately it is possible to give good reasons for better approaches. Good luck with the discussions!